What's the word for this phenomenon?

Here’s something I’ve encountered a few times: I’m presented with an argument about something, and I logically/consciously believe it’s true, but I don’t emotionally believe it’s true. Is there a word for this phenomenon?

And what’s going on with it? It seems pretty weird for a bunch of reasons…

In Kahneman’s system 1/2 terminology, this feels different from many of the classic examples, where the fast, heuristic-based system 1 gets something wrong but, given time and reflection, system 2 gets it right. The thing I’m describing seems like a case where system 1 says the wrong thing, system 2 thinks about it and realizes it’s wrong, and then says “Yeah, I think I’m still gonna go with system 1’s answer.”

I’ve noticed that the feeling often comes up for me when I’m learning math. It’s actually a strong signal to me of whether I really understand a proof. Sometimes I go through a proof and can’t deny that each step is true, so I consciously have to admit the result is true… but I don’t feel its truth, and as a result I’m on shaky mental ground for anything that relies on it. OTOH, sometimes a proof makes sense to me in a way that really does feel like it couldn’t be any other way and has to be true.

But even in the proof cases, it’s weird! For example, there are a bunch of fun little (incorrect) “proofs” that conclude $0 = 1$ or something similar (usually relying on some little trick, like dividing both sides by zero and hoping you don’t notice). I remember being shown them as a kid and thinking “okay, I know it’s BS, even though I can’t spot the trick.” And it seems good that that instinct is built in!

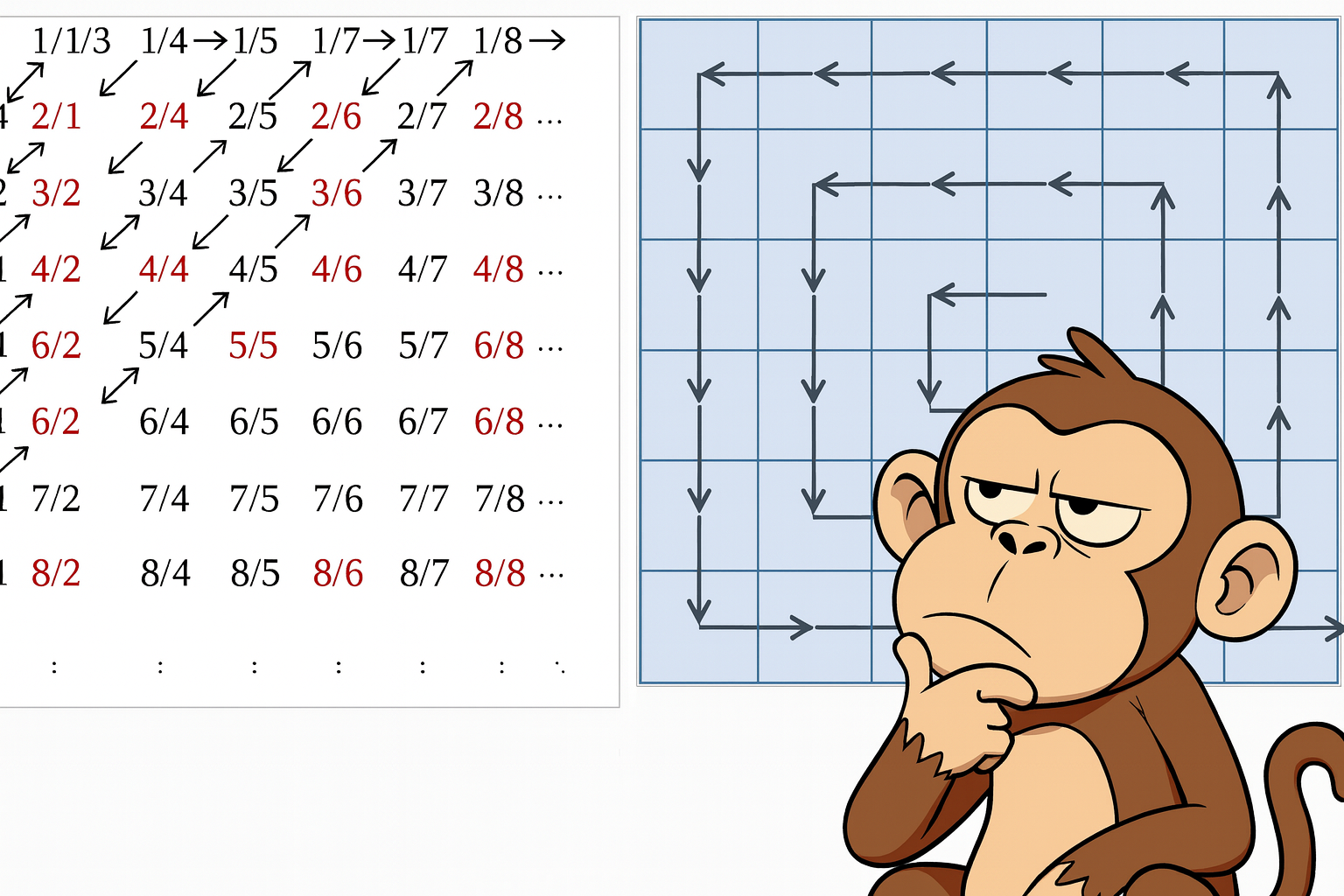
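For reference, here is one common version of this kind of fake proof (not necessarily the one pictured above), starting from any nonzero $a = b$ and with the illegal steps annotated:

$$
\begin{aligned}
a &= b \\
a^2 &= ab \\
a^2 - b^2 &= ab - b^2 \\
(a + b)(a - b) &= b(a - b) \\
a + b &= b \qquad \text{(divide both sides by } a - b \text{, which is } 0\text{)} \\
2b &= b \qquad \text{(substitute } a = b\text{)} \\
2 &= 1 \qquad \text{(divide by } b \neq 0\text{)} \\
1 &= 0 \qquad \text{(subtract } 1\text{)}
\end{aligned}
$$

Every line is valid except the division by $a - b$: since $a = b$, that step divides by zero, and everything after it inherits the error.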

But on the flip side, there are some proofs that set off that BS alarm… and turn out to be true! The Banach-Tarski paradox is one. Counterintuitive results about the sizes of infinite sets, like Hilbert’s Hotel, have always done that to me too, even though I know and “understand” the proofs behind them. So in those cases, if we trusted our BS meters and shut off the reasoning part, we’d be led astray.
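The Hilbert’s Hotel argument really comes down to one short map. As a sketch, assuming the rooms are numbered $1, 2, 3, \dots$ and all are occupied: moving each guest from room $n$ to room $n + 1$ is a bijection from the occupied rooms onto rooms $2, 3, 4, \dots$, which frees room 1 for a new guest without evicting anyone.

$$
f : \{1, 2, 3, \dots\} \to \{2, 3, 4, \dots\}, \qquad f(n) = n + 1, \qquad f^{-1}(m) = m - 1
$$

Because $f$ has an inverse, removing room 1 leaves a set with the same cardinality as the whole hotel. Each piece checks out, and yet that is exactly the conclusion that trips the alarm.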

I’m wondering if it’s a kind of “meta strategy,” or a defense mechanism. Here’s what I mean: I think humans generally use a strategy that includes truth-seeking (since believing wrong things can be bad for survival), and an extension of that is reasoning and deduction (since they help turn true things into more true things). So there’s pressure for our minds to be open to a good argument, since one can change our behavior and make us more likely to survive.

But we probably want some sort of “escape hatch” for this. For example, there are certain conclusions you probably don’t want anyone to be able to convince you of. It’d be a pretty big bug in our brain software if a really smart person could argue to you, “Actually, you should eat your children! Here’s why,” and, because they’re so much smarter and you can’t figure out how to refute it, you’d have no choice but to follow through on it.

Getting very handwavy, it seems like there’s a bias-variance tradeoff here, in ML terms. On some level, always following the facts and reasoning should lead to the truth, given enough time and update opportunities. So in the $0 = 1$ example above, you could just believe it as presented and be incorrect for some time, and if you kept absorbing more info and processing it, it should get fixed eventually. But of course in reality we don’t have infinite time or retries, so it seems like in some cases it’s worth taking on a bunch of bias to lower the variance, and evolution has chosen a reasonably stable middle ground for us. “The market can remain irrational longer than you can remain solvent”, etc.
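To pin down the ML term, here is the standard bias-variance decomposition for squared-error loss. It assumes $y = f(x) + \varepsilon$, where the noise $\varepsilon$ has mean zero and variance $\sigma^2$ and is independent of the training data, and it takes expectations over training sets and noise for an estimator $\hat f$:

$$
\mathbb{E}\big[(y - \hat f(x))^2\big] = \underbrace{\big(\mathbb{E}[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2} + \underbrace{\operatorname{Var}\big[\hat f(x)\big]}_{\text{variance}} + \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

Read loosely in terms of the analogy, a built-in skepticism toward arguments like $0 = 1$ adds to the bias term, while being fully swayed by every clever argument inflates the variance term. Shrinking one of these usually grows the other, which is the tradeoff.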

Is there already a word for this, though? I searched a bit and asked an LLM, but I haven’t found a great match. GPT gave a few options, like:

- “Belief–emotion dissociation — your propositional (cognitive) belief updates, but your emotional/affective system doesn’t.”

- “Cognitive–affective dissonance — a form of dissonance between what you judge to be true and how you feel.”

- “Intellectual assent without conviction — informal but precise: you agree intellectually, not viscerally.”

Those are all… reasonable descriptions of what I’m talking about here, but A) they’re not really a “word”, more of a psychological-term-soup, and B) I googled them and they don’t seem to be standard concepts.

However, it also reminded me of the concept of akrasia (acting against your own better judgment), which feels a lot closer in spirit to what I’m getting at. I.e., you “believe” some choice is the superior action (the way you might consciously believe an argument), but you don’t take that action. But then, do you actually believe it’s the superior action, or the correct argument? Part of the whole akrasia debate is that some would say you don’t actually think it’s the right action given your values, and likewise they might say that you don’t really consciously get the argument.